You can review ESXi host log files using these methods:

- From the Direct Console User Interface (DCUI). For more information, see About the Direct Console ESXi Interface in the vSphere 5.5 Installation and Setup Guide.

- From the ESXi Shell. For more information, see the Log In to the ESXi Shell section in the vSphere 5.5 Installation and Setup Guide.

- Using a web browser at https://HostnameOrIPAddress/host. For more information, see the HTTP Access to vSphere Server Files section.

- Within an extracted vm-support log bundle. For more information, see Export System Log Files in the vSphere Monitoring and Performance Guide or Collecting diagnostic information for VMware ESX/ESXi using the vm-support command (1010705).

- From the vSphere Web Client. For more information, see Viewing Log Files with the Log Browser in the vSphere Web Client in the vSphere Monitoring and Performance Guide.
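When working from an extracted vm-support bundle, you may not know where a given log sits inside the bundle's directory tree, so searching by file name is a simple way to find it. The following is a minimal POSIX shell sketch under these assumptions: the bundle has already been extracted to a local directory, and its internal layout is unknown. The function name find_bundle_logs and the example path are hypothetical and are not part of vm-support.

```shell
#!/bin/sh
# Hypothetical helper (not part of vm-support): print the path of every regular
# file under an extracted bundle whose name matches one of the given log names.
# The bundle's internal layout is assumed unknown, so the whole tree is searched.
# Usage: find_bundle_logs BUNDLE_DIR NAME [NAME...]
find_bundle_logs() {
  bundle_dir="$1"
  shift
  for name in "$@"; do
    # -type f skips symbolic links and directories with a matching name.
    find "$bundle_dir" -type f -name "$name"
  done
}

# Example (hypothetical extraction directory):
# find_bundle_logs /tmp/extracted-bundle vmkernel.log hostd.log vpxa.log
```

Rotated copies of a log have different file names, so this sketch matches only the exact names you pass.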

ESXi Host Log Files

- /var/log/auth.log: ESXi Shell authentication success and failure.
- /var/log/dhclient.log: DHCP client service, including discovery, address lease requests and renewals.
- /var/log/esxupdate.log: ESXi patch and update installation logs.
- /var/log/lacp.log: Link Aggregation Control Protocol logs.
- /var/log/hostd.log: Host management service logs, including virtual machine and host Tasks and Events, communication with the vSphere Client and the vCenter Server vpxa agent, and SDK connections.
- /var/log/hostd-probe.log: Host management service responsiveness checker.
- /var/log/rhttpproxy.log: HTTP connections proxied on behalf of other ESXi host web services.
- /var/log/shell.log: ESXi Shell usage logs, including enable/disable events and every command entered. For more information, see vSphere 5.5 Command-Line Documentation and Auditing ESXi Shell logins and commands in ESXi 5.x (2004810).
- /var/log/sysboot.log: Early VMkernel startup and module loading.
- /var/log/boot.gz: A compressed file that contains boot log information. Read it with zcat /var/log/boot.gz | more.
- /var/log/syslog.log: Management service initialization, watchdogs, scheduled tasks and DCUI use.
- /var/log/usb.log: USB device arbitration events, such as discovery and pass-through to virtual machines.
- /var/log/vobd.log: VMkernel Observation events, similar to vob.component.event.
- /var/log/vmkernel.log: Core VMkernel logs, including device discovery, storage and networking device and driver events, and virtual machine startup.
- /var/log/vmkwarning.log: A summary of Warning and Alert log messages excerpted from the VMkernel logs.
- /var/log/vmksummary.log: A summary of ESXi host startup and shutdown, and an hourly heartbeat with uptime, number of virtual machines running, and service resource consumption. For more information, see Format of the ESXi 5.0 vmksummary log file (2004566).
- /var/log/Xorg.log: Video acceleration.
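From the ESXi Shell, you usually need only the most recent entries of one of the logs listed above. Compressed files such as boot.gz must be decompressed before reading. The following POSIX shell function is a minimal sketch of that pattern. The name show_log and its default of 20 lines are hypothetical choices. gzip -dc is used because it behaves the same as zcat.

```shell
#!/bin/sh
# Hypothetical helper: print the last N lines (default 20) of a host log.
# Files ending in .gz (for example boot.gz) are decompressed with gzip -dc,
# which is equivalent to zcat; other logs are read directly with tail.
# Usage: show_log FILE [N]
show_log() {
  file="$1"
  lines="${2:-20}"
  case "$file" in
    *.gz) gzip -dc "$file" | tail -n "$lines" ;;
    *)    tail -n "$lines" "$file" ;;
  esac
}

# Examples from the ESXi Shell:
# show_log /var/log/vmkernel.log 50
# show_log /var/log/boot.gz
```

For a full read of boot.gz, the zcat /var/log/boot.gz | more command from the list remains the simpler choice. The function only trims the output to the tail.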

Note: For information on sending logs to another location (such as a datastore or remote syslog server), see Configuring syslog on ESXi 5.0 (2003322).

Logs from vCenter Server Components

When an ESXi 5.1 or 5.5 host is managed by vCenter Server 5.1 or 5.5, two components are installed on the host, each with its own logs:

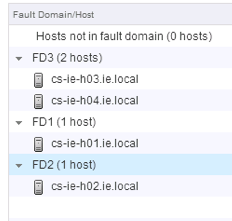

- /var/log/vpxa.log: vCenter Server vpxa agent logs, including communication with vCenter Server and the Host Management hostd agent.
- /var/log/fdm.log: vSphere High Availability logs, produced by the fdm service. For more information, see the vSphere HA Security section of the vSphere Availability Guide.